I previously found the .NET version faster with the GTX1650, but I haven't had time to test it yet with this version of CPAI. Your results will be interesting, but I need to get some days of data and use Excel pivot tables to tease out the truth.
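For anyone who would rather script the pivot than do it in Excel, here is a minimal sketch of the same idea using only the Python standard library. The row layout (backend, model size, inference time in ms) and the function name `summarise` are assumptions for illustration, and the sample rows are placeholders rather than real measurements.

```python
from collections import defaultdict
from statistics import mean, median


def summarise(rows):
    """Group (backend, model_size, inference_ms) rows and return stats per group."""
    groups = defaultdict(list)
    for backend, model_size, ms in rows:
        groups[(backend, model_size)].append(ms)
    return {
        key: {"count": len(values), "mean_ms": mean(values), "median_ms": median(values)}
        for key, values in groups.items()
    }


# Placeholder rows purely to show the shape of the data; NOT real measurements.
sample = [
    ("CUDA", "small", 40.0),
    ("CUDA", "small", 50.0),
    ("DirectML", "medium", 44.0),
    ("DirectML", "medium", 46.0),
]

for key, stats in sorted(summarise(sample).items()):
    print(key, stats)
```

With a few days of logged inference times in this shape, the per-group median is probably a fairer comparison than the mean, since occasional slow inferences skew the average.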

As for the stability of this BI5 PC, it was a long, hard fight. The entire story was lost in the great forum crash, but basically I found:

1. A satellite receiver card that periodically blocked USB ports.

2. A power supply that glitched occasionally.

3. A RAM stick that failed when the PSU was OK.

4. A driver for my second NIC that was so old it prevented Win11 installing.

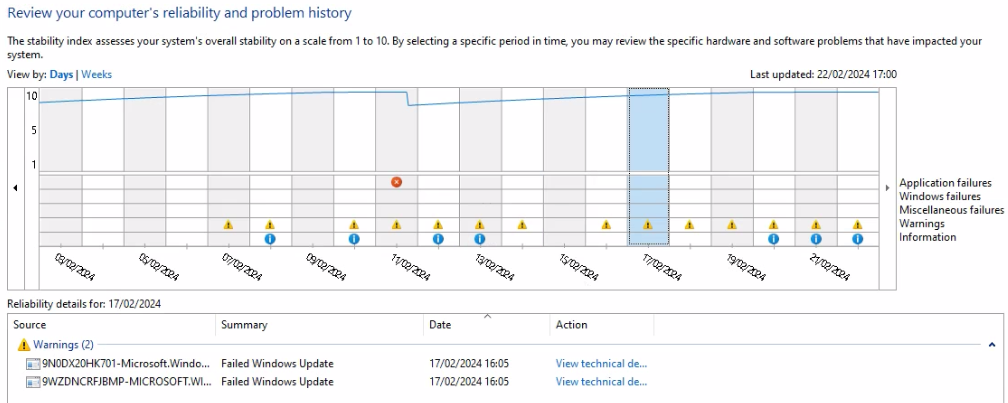

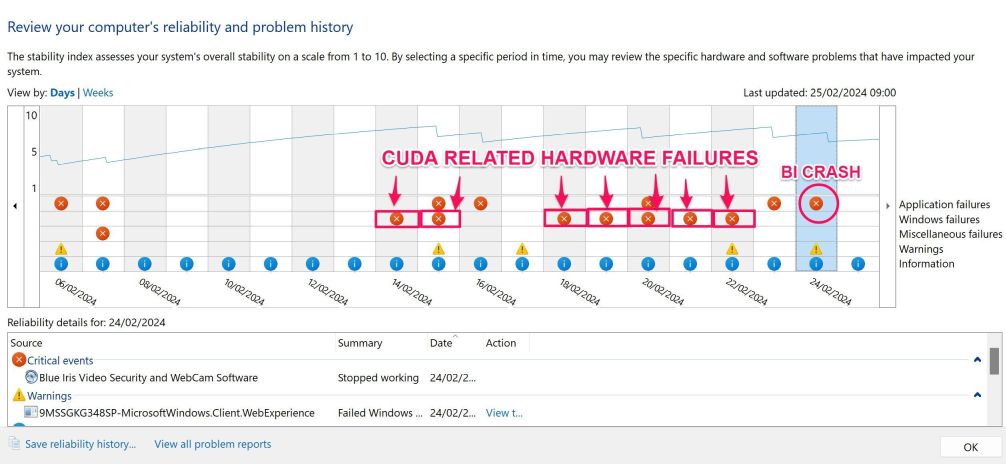

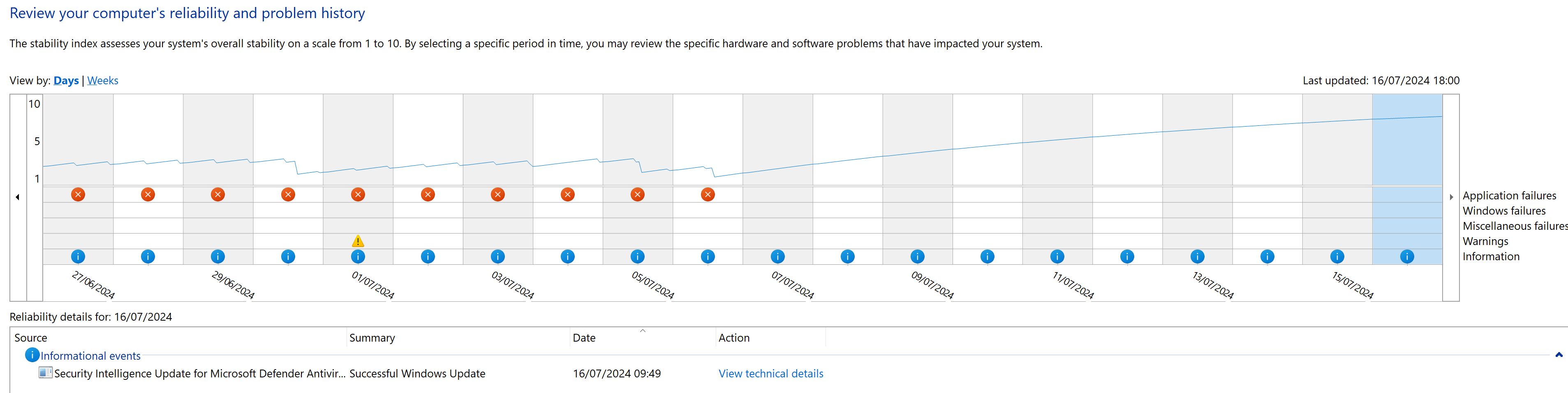

My biggest ally was "Reliability History" in Win10 and onwards. Well worth a look if you haven't seen it before:

I lock down Windows updates for the maximum period (until I have time to fix whatever breaks), but it looks like MS are trying to push some through. I'll check it this weekend; it's now the main reason I reboot that PC!

CUDA Errors with YOLOv5 Object Detection on CPAI 2.5.x – Seeking Insights

Re: CUDA Errors with YOLOv5 Object Detection on CPAI 2.5.x – Seeking Insights

Forum Moderator.

Problem ? Ask and we will try to assist, but please check the Help file.

Re: CUDA Errors with YOLOv5 Object Detection on CPAI 2.5.x – Seeking Insights

I wish to convey my admiration for the state of your reliability monitor; it truly stands out for its impressive performance.

My setup, however, has encountered challenges, particularly following a Blue Iris crash yesterday, which I believe was triggered by my balcony camera freezing, necessitating a restart of the application.

Upon reviewing the reliability monitor, I've noted several Blue Iris crashes, which underline persistent instability issues tied to CPAI and CUDA errors, as illustrated below.

Adopting Yolo.NET (DirectML) has, fortunately, led to a crash-free experience on the GPU side, which leaves me puzzled about the underlying cause of CUDA's instability. DirectML has not crashed since:

This experience has solidified my decision to continue using Yolo.NET moving forward.

The switch to .NET has not only stabilised the system but also seems to have slightly improved inference times. When comparing performance, the medium model under .NET appears to either match or slightly outperform CUDA's speed with a small model, which is quite fascinating considering CUDA's hardware specificity. However, faced with CUDA's consistent failure after 11 hours of operation, I find myself with no choice but to adopt DirectML.

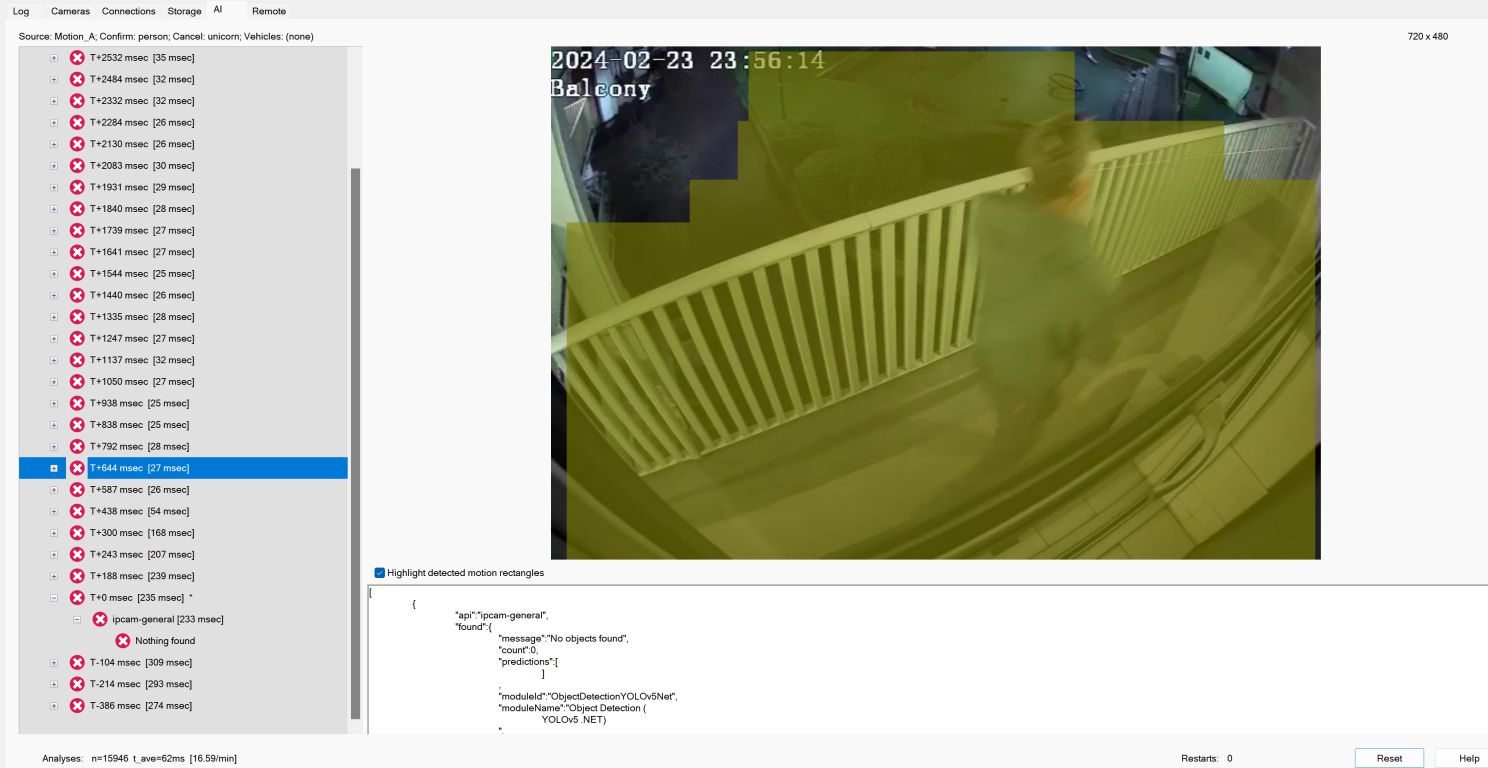

An intriguing point I've observed, albeit not definitively linked to .NET, is a detectable inconsistency in CPAI's object detection capabilities, as highlighted below:

Despite being in the frame under strict AI settings (Night Profile: Min Confidence 60%, Pre-Trigger images: 3, Post-Trigger images: 30, Analyse one image every 100ms), the system failed to detect me in that instance. Strangely, it had successfully detected me when I returned home yesterday evening in the same lighting conditions etc. This inconsistency is bewildering, especially given the meticulously tuned settings aimed at ensuring maximum detection reliability.
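To make those settings concrete, here is a small sketch of the arithmetic and of how a minimum-confidence threshold can hide a borderline detection. It is only an illustration: the function names are hypothetical, the prediction dictionaries (a `label` plus a `confidence` between 0 and 1) are an assumed shape, and the confidence values are invented, not taken from my logs.

```python
def post_trigger_window_s(images, interval_ms):
    """Seconds of footage covered if one image is analysed every interval_ms."""
    return images * interval_ms / 1000


def confirmed(predictions, min_confidence, wanted=("person",)):
    """Keep only wanted labels at or above the confidence threshold."""
    return [
        p for p in predictions
        if p["label"] in wanted and p["confidence"] >= min_confidence
    ]


# Night profile values quoted above: 30 post-trigger images, one every 100 ms.
print(post_trigger_window_s(30, 100), "seconds analysed after the trigger")

# Invented confidences: one just under and one just over a 60% threshold.
borderline = [{"label": "person", "confidence": 0.58}]
clear = [{"label": "person", "confidence": 0.62}]
print(confirmed(borderline, 0.60))  # empty list: would count as nothing found
print(confirmed(clear, 0.60))       # one prediction survives the filter
```

If the missed event was a borderline case like the first one, a detection a couple of points under the threshold would look exactly like a failure to detect, so checking the raw confidence for that alert would be a sensible first step.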

The transition from CUDA to DirectML may have affected how reliably detections occur, since detection was uninterrupted before the switch. Equally, this could be a coincidence or a minor glitch that happened to coincide with the move from CUDA to DirectML.

Sharing this concern seemed necessary as it continues to merit further attention.

In my quest for solutions, I stumbled upon a recent topic on here suggesting that "unicorn" is now obsolete. After further digging, I also found a suggestion elsewhere to adjust the global AI tab in the camera settings: entering "Nothing found:0" in the "To cancel" box removes "Nothing found" alerts (marked green) from the Confirmed alerts list and compels the AI to review all images within an alert to identify the best one. That sounds very similar to the unicorn trick?

It does make me wonder whether replacing "unicorn" with "Nothing found:0" in the cancel field might compel the AI to re-examine the images and successfully identify the object, or is that a wrong assumption?

I'll conclude my observations here, as I've shared a substantial amount and have other concerns to address in separate posts.

To recap, I am determined to keep using the reliability monitor to track down any persisting errors and raise the stability score as far as possible. I also really need to figure out why CPAI sometimes fails to detect, as in the example above.

Regards

SN

Re: CUDA Errors with YOLOv5 Object Detection on CPAI 2.5.x – Seeking Insights

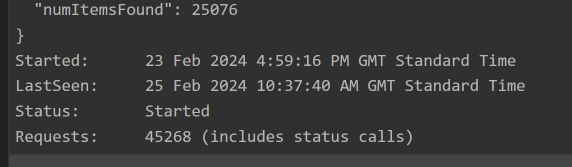

Just wanted to drop a quick update; fingers crossed I'm not jinxing it by saying this too soon, but it looks like the CPAI 2.5.6 update has sorted out those CUDA memory access issues and the bombing out after 10-12 hours.

After giving it some thought, I’ve realised CUDA is actually a bit snappier than DirectML. It's not a huge difference, just about 10ms (maybe even a little faster), but every little helps.

So far, so good – the system has been running smoothly without a hitch for the last 24 hours.

Module 'Object Detection (YOLOv5 6.2)' 1.9.1 (ID: ObjectDetectionYOLOv5-6.2)

Valid: True

Module Path: <root>\modules\ObjectDetectionYOLOv5-6.2

AutoStart: True

Queue: objectdetection_queue

Runtime: python3.7

Runtime Loc: Shared

FilePath: detect_adapter.py

Pre installed: False

Start pause: 1 sec

Parallelism: 0

LogVerbosity:

Platforms: all,!raspberrypi,!jetson

GPU Libraries: installed if available

GPU Enabled: enabled

Accelerator:

Half Precis.: enable

Environment Variables

APPDIR = <root>\modules\ObjectDetectionYOLOv5-6.2

CUSTOM_MODELS_DIR = <root>\modules\ObjectDetectionYOLOv5-6.2\custom-models

MODELS_DIR = <root>\modules\ObjectDetectionYOLOv5-6.2\assets

MODEL_SIZE = Medium

USE_CUDA = True

YOLOv5_AUTOINSTALL = false

YOLOv5_VERBOSE = false

Status Data: {

"inferenceDevice": "GPU",

"inferenceLibrary": "CUDA",

"canUseGPU": "true",

"successfulInferences": 51402,

"failedInferences": 9,

"numInferences": 51411,

"averageInferenceMs": 45.582642698727675

}

Started: 02 Mar 2024 11:51:32 AM GMT Standard Time

LastSeen: 03 Mar 2024 12:25:46 PM GMT Standard Time

Status: Started

Requests: 51412 (includes status calls)
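For a quick sanity check on those numbers, the sketch below works out the uptime, failure rate, and throughput from the figures in the status dump. The values are copied from the dump above; the function name `summarise_status` and the timestamp format string are my own assumptions (the trailing "GMT Standard Time" is dropped before parsing).

```python
from datetime import datetime

# Values copied from the Status Data and Started/LastSeen lines above.
status = {
    "successfulInferences": 51402,
    "failedInferences": 9,
    "numInferences": 51411,
    "averageInferenceMs": 45.582642698727675,
}
started = "02 Mar 2024 11:51:32 AM"
last_seen = "03 Mar 2024 12:25:46 PM"

FMT = "%d %b %Y %I:%M:%S %p"  # assumes English month abbreviations


def summarise_status(status, started, last_seen):
    """Derive uptime, failure rate and throughput from the status figures."""
    uptime = datetime.strptime(last_seen, FMT) - datetime.strptime(started, FMT)
    seconds = uptime.total_seconds()
    total = status["numInferences"]
    return {
        "uptime_hours": seconds / 3600,
        "failure_pct": 100 * status["failedInferences"] / total,
        "inferences_per_min": total / (seconds / 60),
    }


summary = summarise_status(status, started, last_seen)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

On these figures the uptime comes out at roughly 24.6 hours and the failure rate at under 0.02%, which fits the 24 hours of smooth running mentioned above.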

Re: CUDA Errors with YOLOv5 Object Detection on CPAI 2.5.x – Seeking Insights

After so long, I've finally managed to resolve things! It seems I've identified the issues and they are now sorted out. We're headed to the topside!

> TimG wrote (Thu Feb 22, 2024 6:35 pm):
>
> I previously found the .NET version faster with the GTX1650, but I haven't had time to test it yet with this version of CPAI. Your results will be interesting, but I need to get some days of data and use Excel pivot tables to tease out the truth.
>
> As for the stability of this BI5 PC, it was a long, hard fight. The entire story was lost in the great forum crash, but basically I found:
>
> 1. A satellite receiver card that periodically blocked USB ports.
> 2. A power supply that glitched occasionally.
> 3. A RAM stick that failed when the PSU was OK.
> 4. A driver for my second NIC that was so old it prevented Win11 installing.
>
> My biggest ally was "Reliability History" in Win10 and onwards. Well worth a look if you haven't seen it before:
>
> Screenshot 2024-02-22 182915.png
>
> I lock down Windows updates for the maximum period (until I have time to fix whatever breaks), but it looks like MS are trying to push some through. I'll check it this weekend; it's now the main reason I reboot that PC!

Re: CUDA Errors with YOLOv5 Object Detection on CPAI 2.5.x – Seeking Insights

Looking good !

Along with "Reliability History" I had used a program called "WhoCrashed" and went down that rabbit hole. People at the time said they would have thrown the PC out of a window due to those multiple failure modes.
